Paper title: Ganin, Yaroslav, et al. “Domain-adversarial training of neural networks.” The Journal of Machine Learning Research 17.1 (2016): 2096-2030.

The main purpose of this paper is to handle tasks in which the target-domain distribution differs from that of the training (source) domain. The easiest way to deal with the domain difference is to augment the data, but we cannot anticipate every change in the target domain. Other leading methods, which use autoencoders in two separate steps, are heuristic and inefficient. The proposed DANN (Domain-Adversarial Neural Network) is a feature-based method built on adversarial training, and the authors argue that it is a principled way to solve the domain adaptation task. It can also be applied to almost any feed-forward model by augmenting it with a few standard layers and a new gradient reversal layer.

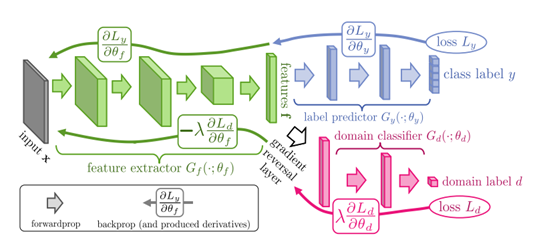

DANN learns features that are independent of the difference between domains. Training follows two update paths, which make the features:

1) Discriminative for the main learning task on the source domain

2) Indiscriminate with respect to the shift between the domains (via reversed back-propagation)
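The reversed back-propagation of path 2 is what the gradient reversal layer does: it is the identity in the forward pass and multiplies the gradient by -λ in the backward pass. Below is a framework-free sketch on a hypothetical scalar toy model (feature f = w·x, with squared-error label and domain heads), which is our own simplification rather than the paper's architecture; `GradientReversal` and `feature_grad` are illustrative names, not a library API.

```python
class GradientReversal:
    """Identity on the forward pass; multiplies the incoming gradient by -lam
    on the backward pass. A framework-free sketch, not a library layer."""

    def __init__(self, lam: float) -> None:
        self.lam = lam

    def forward(self, x: float) -> float:
        return x  # features reach the domain head unchanged

    def backward(self, grad_output: float) -> float:
        return -self.lam * grad_output  # flip and scale the domain gradient


def feature_grad(x: float, w: float, y: float, d: float,
                 v: float, u: float, lam: float) -> float:
    """Gradient of (L_y - lam * L_d) w.r.t. w for a hypothetical scalar toy:
    feature f = w * x, label head y_hat = v * f, domain head d_hat = u * f,
    both heads trained with squared error."""
    f = w * x
    grl = GradientReversal(lam)
    # Path 1: label loss (v*f - y)^2, ordinary back-propagation.
    dly_df = 2.0 * (v * f - y) * v
    # Path 2: domain loss (u*g - d)^2 with g = grl.forward(f); the GRL flips its gradient.
    g = grl.forward(f)
    dld_dg = 2.0 * (u * g - d) * u
    dld_df = grl.backward(dld_dg)
    # Both paths meet at f; chain rule with df/dw = x.
    return (dly_df + dld_df) * x
```

With x = w = y = v = u = 1, d = 0 and λ = 0.5, the label path contributes 0 (the label is already fit) and the reversed domain path contributes -1, so a gradient-descent step on w increases w, which increases the domain head's loss instead of reducing it.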

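The main strategy is to lower the terms of an upper bound on the target error, so that the target error itself is pushed down. The bound adds a divergence between the source and target distributions to the source error. The total variation distance is the natural candidate for this divergence, but it cannot be estimated reliably from finite samples, so the analysis uses the H-divergence, which restricts the supremum to sets defined by hypotheses in H.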
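For reference, the two divergences and the shape of the bound can be written as follows. This is a condensed restatement rather than the paper's exact theorem: $C(d, n, \delta)$ is our shorthand (not the paper's notation) for the sample-complexity terms that depend on the VC dimension $d$ of $\mathcal{H}$, the sample size $n$, and the confidence level (the bound holds with probability at least $1-\delta$), and $\beta$ bounds the combined error of the best hypothesis in $\mathcal{H}$ on both domains.

$$
d_1(\mathcal{D}_S, \mathcal{D}_T) = 2 \sup_{B} \left| \Pr_{\mathcal{D}_S}[B] - \Pr_{\mathcal{D}_T}[B] \right|,
\qquad
d_{\mathcal{H}}(\mathcal{D}_S^X, \mathcal{D}_T^X) = 2 \sup_{\eta \in \mathcal{H}} \left| \Pr_{x \sim \mathcal{D}_S^X}[\eta(x) = 1] - \Pr_{x \sim \mathcal{D}_T^X}[\eta(x) = 1] \right|
$$

$$
R_{\mathcal{D}_T}(\eta) \le R_S(\eta) + \hat{d}_{\mathcal{H}}(S, T) + C(d, n, \delta) + \beta,
\qquad
\beta \ge \inf_{\eta^* \in \mathcal{H}} \left[ R_{\mathcal{D}_S}(\eta^*) + R_{\mathcal{D}_T}(\eta^*) \right]
$$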

The bound on the target risk can be lowered by reducing both the source risk and the empirical H-divergence $\hat{d}_{\mathcal{H}}(S,T)$. The remaining terms depend on the VC dimension of H and on the combined source and target error of the best hypothesis in H, so they are treated as constants.
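To make the empirical H-divergence concrete, here is a minimal sketch that computes it exactly for a deliberately tiny hypothesis class: 1-D threshold classifiers and their complements. That class is our choice for illustration; the paper works with neural-network hypothesis classes. The sketch also includes the proxy A-distance $2(1 - 2\epsilon)$, a common practical estimate in which $\epsilon$ is the held-out error of a trained domain classifier. Function names are hypothetical.

```python
from typing import Sequence


def empirical_h_divergence(source: Sequence[float], target: Sequence[float]) -> float:
    """Empirical H-divergence for H = {[x > t]} plus complements {[x <= t]}.

    d_H(S, T) = 2 * max over eta in H of |frac_S(eta = 1) - frac_T(eta = 1)|
    """
    # Every distinct upper set {x > t} is reached by some observed point t,
    # plus one threshold below all points (everything predicted 1).
    points = sorted(set(source) | set(target))
    candidates = [points[0] - 1.0] + points
    best = 0.0
    for t in candidates:
        p_s = sum(x > t for x in source) / len(source)
        p_t = sum(x > t for x in target) / len(target)
        # abs() also covers the complement [x <= t]:
        # (1 - p_s) - (1 - p_t) = p_t - p_s.
        best = max(best, abs(p_s - p_t))
    return 2.0 * best


def proxy_a_distance(domain_error: float) -> float:
    """Proxy A-distance from a trained domain classifier's held-out error."""
    return 2.0 * (1.0 - 2.0 * domain_error)
```

Identical samples give 0 and perfectly separable samples give the maximum value 2. Likewise, a domain classifier stuck at chance (error 0.5) gives a proxy A-distance of 0, which is the situation DANN's features aim for.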

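They also consider the symmetric difference hypothesis space when computing the empirical H-divergence between the source and target domains. This divergence term can be viewed as a regularizer: a domain adaptation term is added to the network's objective and optimized simultaneously with the classification loss. Features become indiscriminate with respect to the domain shift when $\hat{d}_{\mathcal{H}}(S,T)$ is driven toward zero, that is, when no domain classifier in H can tell source features from target features. The complete optimization objective is the source classification loss minus λ times the domain classification loss, where λ trades off the two terms.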
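For the shallow (one hidden layer) version of the network, with W, b for the hidden layer, V, c for the label output layer, and u, z for the domain regressor, the objective and its saddle point can be written as follows, as we recall them from the paper. Source examples are indexed $i = 1, \dots, n$ and target examples $i = n+1, \dots, N$ with $n' = N - n$; $\mathcal{L}_y^i$ is the label loss and $\mathcal{L}_d^i$ the domain loss on example $i$.

$$
E(W, V, \mathbf{b}, \mathbf{c}, \mathbf{u}, z) = \frac{1}{n}\sum_{i=1}^{n} \mathcal{L}_y^i(W, \mathbf{b}, V, \mathbf{c})
- \lambda \left( \frac{1}{n}\sum_{i=1}^{n} \mathcal{L}_d^i(W, \mathbf{b}, \mathbf{u}, z) + \frac{1}{n'}\sum_{i=n+1}^{N} \mathcal{L}_d^i(W, \mathbf{b}, \mathbf{u}, z) \right)
$$

$$
(\hat{W}, \hat{V}, \hat{\mathbf{b}}, \hat{\mathbf{c}}) = \arg\min_{W, V, \mathbf{b}, \mathbf{c}} E(W, V, \mathbf{b}, \mathbf{c}, \hat{\mathbf{u}}, \hat{z}),
\qquad
(\hat{\mathbf{u}}, \hat{z}) = \arg\max_{\mathbf{u}, z} E(\hat{W}, \hat{V}, \hat{\mathbf{b}}, \hat{\mathbf{c}}, \mathbf{u}, z)
$$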

As shown in the complete network figure, the label predictor (parameterized by W, b, V, c), which performs the main classification task on the source domain, and the domain regressor (parameterized by u, z), which through the gradient reversal pushes the shared features to be domain-independent, are trained adversarially: W, b, V, c minimize the objective while u, z maximize it.
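Putting the pieces together, the adversarial training of the shallow network can be sketched in plain Python. This is a toy under our own assumptions: 1-D inputs, sigmoid hidden units, binary labels, cross-entropy losses, and one (source, target) pair per SGD step. `init_params`, `dann_step`, and the synthetic data are hypothetical. W, b, V, c step down the objective (label loss minus λ times domain loss), u, z step up it (equivalently, down λ times the domain loss), and the hidden layer receives the domain gradient multiplied by -λ.

```python
import math
import random


def sigmoid(a: float) -> float:
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def init_params(hidden: int, seed: int = 0) -> dict:
    """Hypothetical shallow DANN on 1-D inputs. Names follow the review:
    W, b hidden layer; V, c label head; u, z domain regressor."""
    rng = random.Random(seed)
    return {
        "W": [rng.gauss(0.0, 0.5) for _ in range(hidden)],
        "b": [0.0] * hidden,
        "V": [rng.gauss(0.0, 0.5) for _ in range(hidden)],
        "c": 0.0,
        "u": [rng.gauss(0.0, 0.5) for _ in range(hidden)],
        "z": 0.0,
    }


def features(p: dict, x: float) -> list:
    """Shared hidden layer h = sigmoid(W x + b)."""
    return [sigmoid(w * x + b) for w, b in zip(p["W"], p["b"])]


def head(weights: list, bias: float, h: list) -> float:
    """Logistic output layer (used for both the label and the domain head)."""
    return sigmoid(sum(v * hj for v, hj in zip(weights, h)) + bias)


def dann_step(p: dict, x_s: float, y_s: int, x_t: float, lam: float, lr: float) -> None:
    """One SGD step on a (source, target) pair; updates p in place."""
    D = len(p["W"])
    dW, db, dV, du = [0.0] * D, [0.0] * D, [0.0] * D, [0.0] * D
    dc = dz = 0.0
    # Source: label loss + domain loss (domain 0). Target: domain loss only (domain 1).
    for x, y, dom in ((x_s, y_s, 0), (x_t, None, 1)):
        h = features(p, x)
        dh = [0.0] * D  # gradient of the objective w.r.t. h
        if y is not None:
            g_y = head(p["V"], p["c"], h) - y  # d(cross-entropy)/d(logit)
            dc += g_y
            for j in range(D):
                dV[j] += g_y * h[j]
                dh[j] += g_y * p["V"][j]
        g_d = head(p["u"], p["z"], h) - dom
        dz += g_d
        for j in range(D):
            du[j] += g_d * h[j]
            dh[j] -= lam * g_d * p["u"][j]  # gradient reversal: -lam times domain grad
        for j in range(D):
            dpre = dh[j] * h[j] * (1.0 - h[j])  # sigmoid derivative
            dW[j] += dpre * x
            db[j] += dpre
    # All gradients used the old parameters; apply them now.
    for j in range(D):
        p["W"][j] -= lr * dW[j]
        p["b"][j] -= lr * db[j]
        p["V"][j] -= lr * dV[j]
        p["u"][j] -= lr * lam * du[j]  # u, z maximize the objective = minimize lam * L_d
    p["c"] -= lr * dc
    p["z"] -= lr * lam * dz


if __name__ == "__main__":
    rng = random.Random(42)
    # Toy covariate shift: source x ~ N(0, 1), target x ~ N(1, 1); label y = [x > 0.5].
    src = [rng.gauss(0.0, 1.0) for _ in range(200)]
    tgt = [rng.gauss(1.0, 1.0) for _ in range(200)]
    p = init_params(hidden=4)
    for _ in range(20):
        for x_s, x_t in zip(src, tgt):
            dann_step(p, x_s, int(x_s > 0.5), x_t, lam=0.1, lr=0.1)
    hits = sum((head(p["V"], p["c"], features(p, x)) > 0.5) == (x > 0.5) for x in tgt)
    print(f"target accuracy: {hits / len(tgt):.2f}")
```

Running the file trains on the small synthetic shift and prints the target accuracy. We make no claim about the number it prints; a real experiment would at least compare against λ = 0, which turns this into ordinary source-only training.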

DANN shows strong results on several classification benchmarks, including sentiment analysis and image classification, and achieved state-of-the-art domain adaptation performance at the time of publication.
